Lettrism (pronounced let-riz-uhm)

A French avant-garde art and literary movement

established in 1946 and inspired, inter alia, by Dada and surrealism. The coordinate term is situationism.

1946: From French lettrisme,

a variant of lettre (letter). Letter dates from the late twelfth century

and was from the Middle English letter

& lettre, from the Old French

letre, from the Latin littera (letter of the alphabet (in

plural); epistle; literary work), from the Etruscan, from the Ancient Greek

διφθέρᾱ (diphthérā)

(tablet) (and related to diphtheria). The form displaced the Old English bōcstæf (literally “book staff” in the

sense of “the alphabet’s symbols”) and ǣrendġewrit

(literally “message writing” in the sense of “a written communication longer

than a “note” (ie, something like the modern understanding of “a letter”)). The –ism suffix was from the Ancient Greek

ισμός (ismós) & -isma noun suffixes, often directly,

sometimes through the Latin –ismus

& –isma (from where English picked

up –ize) and sometimes through the French –isme

or the German –ismus, all

ultimately from the Ancient Greek (where it tended more specifically to express

a finished act or thing done). It

appeared in loanwords from Greek, where it was used to form abstract nouns of

action, state, condition or doctrine from verbs and on this model, was used as

a productive suffix in the formation of nouns denoting action or practice,

state or condition, principles, doctrines, a usage or characteristic, devotion

or adherence (criticism; barbarism; Darwinism; despotism; plagiarism; realism;

witticism etc). Letterism is listed by some sources as an alternative

spelling but in literary theory it is used in a different sense. Lettrism and lettrist are nouns; the noun

plural is lettrists.

A Lettrist

was (1) one who practiced Lettrism or (2) a supporter or advocate of Lettrism. Confusingly, in the English-speaking world, the spelling Letterist has been

used in this context, presumably because it’s a homophone (if pronounced in the

“correct (U)” way) and the word is “available” because although one who keeps

a diary is a “diarist”, even the most prolific of inveterate letter writers

are not called “letterists”. The

preferred term for a letter-writer is correspondent, especially for those who

write letters regularly or in an official capacity. The Letterist International (LI) was a

Paris-based collective of radical artists and cultural theorists which existed 1952-1957

before forming the Situationist International (SI), a trans-European,

unstructured collective of artists and political thinkers which eventually

became more a concept than a movement. Influenced

by the criticism that philosophy had tended increasingly to fail at the moment

of its actualization, the SI, although it assumed the inevitability of social

revolution, always maintained many (cross-cutting) strands of expectations of

the form(s) this might take. Indeed,

just as a world-revolution did not follow the Russian revolutions of 1917, the

events of May, 1968 failed to realize the predicted implications; the SI can be

said then to have died. The SI’s

discursive output between 1968 and 1972 may be treated either as a lifeless

aftermath to an anti-climax or a bunch of bitter intellectuals serving as mourners

at their own protracted funeral. In

literary theory, while “Lettrism” has a defined historical meaning, the use of

“letterism” is vague and not a recognized term although it has informally been

used (often with some degree of irony) of practices emphasizing the use of

letters or alphabetic symbols in art or literature and given the prevalence of

text or a symbolic analogue in art since the early twentieth century, it seems

surprising “letterism” isn’t more used in criticism. That is of course an Anglo-centric view of

things because the French Lettrists themselves are said to prefer the spelling

“Letterism”.

Jacques Derrida smoking pipe.

The French literary movement Lettrism was founded in

Paris in 1946 and the two most influential figures in the early years were the Romanian-born

French poet, film maker and political theorist Isidore Isou (1925–2007) and his

long-term henchman, the French poet & writer Maurice Lemaître (1926-2018). Western Europe was awash with avant-garde

movements in the early post-war years but what distinguished Lettrism was its

focus on breaking down (deconstruction was not yet a term used in this sense) traditional

language and meaning by emphasizing the materiality of letters and sounds

rather than conventionally-assembled words. Scholars

of linguistics and the typographic community had of course long made a study of

letters, their form, variation and origin, but in Lettrism it was less about

the letters as objects than the act of dismantling the structures of language letters

created, the goal being the identification (debatably the creation) of new

forms of meaning through pure sound, visual abstraction and the aesthetic form

of letters. Although influenced most by Dada

and surrealism, the effect of the techniques of political propaganda used during

the 1930s & 1940s was noted by the Lettrists and their core tenet was an

understanding of the letter itself as the fundamental building block of art and

literature. Often they would break down language

into letters or phonetic sounds, assessing and deploying them for their aesthetic

or auditory qualities rather than their conventional meaning(s). In that sense the Lettrists can be seen as

something of a precursor of post-modernism’s later “everything is text” orthodoxy although that too has an interesting

origin. The French philosopher Jacques

Derrida (1930-2004) made famous the phrase “Il n'y a pas de hors-texte” which often is

translated as something like “there is no meaning beyond the text” but “hors-texte”

(outside the text) was printers’ jargon for those parts of a book without regular

page numbers (blank pages, copyright page, table of contents et al) and Derrida’s

point actually was the hors-texte must be regarded as a part of the text. There was much intellectual opportunism in

post modernism and for their own purposes it suited many to assert what Derrida said

was “There is nothing outside the text” and what he meant was “everything is

part of a (fictional) text and nothing is real” whereas his point was it’s not

possible to create a rule which rigidly delineates what is “the text” and what is “an appendage

to the text”. Troublingly for some post

modernists, Derrida did proceed on a case-by-case basis although he seems not to have explained how the meaning of the text in an edition of a book with an appended "This page is intentionally left blank" page might differ from one with no such page. It may be some earnest student of post-modernism has written an essay convincingly explaining exactly that.

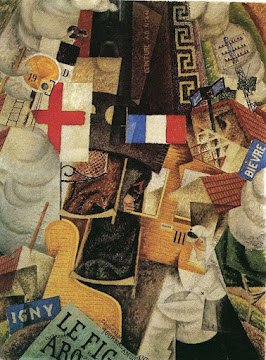

The Lettrism project was very much a rejection of traditional

language structures and the meanings they denoted; it was a didactic

endeavor, the Lettrists claiming not only had they transcended conventional

grammar & syntax but they could obviate even a need for meaning in

words, their work a deliberate challenge to their audiences to rethink how

language functions. As might be

imagined, their output was “experimental” and in addition to some takes on the

ancient form of “pattern poetry” included what they styled “concrete poetry”

& “phonetic poetry”, visual art and performance pieces which relied on abstraction,

the most enduring of which was the “hypergraphic”,

an object sometimes described as “picture writing” which combined letters,

symbols, and images, blending visual and textual elements into a single art

form, often as collages or as graphic-like presentations on canvas or paper. This wasn’t a wholly new concept but the

lettrists vested it with new layers of meaning which, at least briefly,

intrigued many although it was dismissed also as “visual gimmickry” or that

worst of insults in the avant-garde: “derivative”. Despite being one of the many footnotes in

the history of modern art, Lettrism never went away and in a range of artistic

fields, even today there are those who style themselves “lettrists” and the

visual clues of the movement’s influence are all around us.

Chrysler’s letterism: The Chrysler 300 “letter series”

1955-1965.

The “letter series” Chrysler 300s were produced in

limited numbers in the US between 1955 and 1965; technically, they were the high-performance

version of the luxury Chrysler New Yorker and the

first in 1955 was labeled C-300, an allusion to the 300 hp (220 kW) 331 cubic

inch (5.4 litre) Hemi V8, then the most powerful engine offered in a production

car. The C-300 was well received and when

an updated version was released in 1956, it was dubbed 300B, the annual

releases appending the next letter in the alphabet as a suffix although in

1963 “I” was skipped when the 300H was replaced by the 300J, the rationale being it might be confused with a “1” (ie

the numeral “one”), the same reasoning explaining why there are so few “I cup”

bras, some manufacturers filling the gap in the market between “H cup” & “J

cup” with a “HH cup” but there’s no evidence the corporation’s concerns ever prompted

them to ponder a “300HH”.

Retrospectively thus, the 1955 C-300 is often described as the 300A

although this was never an official factory designation. While in the narrow technical sense not a

part of the “muscle car” lineage (defined by the notion of putting a “big”

car’s “big” engine into a smaller, lighter model), the letter series cars were

an important part of the “power race” of the 1950s and an evolutionary step in

what would emerge in 1964 as the muscle car branch and the most plausible LCA (last common ancestor) of both was the Buick Century (1936-1942). The letter series was retired after 1965 because the

market preference for high-performance cars had shifted to the smaller, lighter,

pony cars & intermediates (neither of which existed in the early years of

the 300) though the “non letter series” 300s (introduced in 1962) continued until 1971 with a toned-down emphasis on speed and a shift to style.

1955 Chrysler C-300 (300A).

The 1955 C-300 typified Detroit’s “mix & match”

approach to the parts bin in that it conjured something “new” at relatively low

cost, combining the corporation’s most powerful Hemi V8 with the New Yorker

Series (C-68) platform, the visual differentiation achieved by using the front

bodywork (the “front clip” in industry jargon) from the top-of-the-range

Imperial. The justification for the existence of the thing was

to fulfill the homologation requirements of NASCAR (National Association for

Stock Car Auto Racing) that a certain number of various components be sold to

the public before a car could be defined as a “production” car (ie a “stock”

car, a term which shamelessly would be prostituted in the years to come) and used

in sanctioned competition. Accordingly,

the C-300 was configured with the 331 cubic inch Hemi V8 fitted with dual four

barrel carburetors, solid valve lifters and a high-lift camshaft profiled for

greater top-end power. Better to handle

the increased power, stiffer front and rear suspension was used and it was very

much in the tradition of the big, powerful grand-touring cars of the 1930s

such as the Duesenberg SJ, something that with little modification could be

competitive on the track. Very

successful in NASCAR racing, the C-300 also set a number of speed records in

timed trials but it was very much a niche product; despite the price not being

excessive for what one got, only 1,725 were made.

1956 Chrysler 300B (left) and Highway Hi-Fi phonograph player (right).

The 300B used an updated version of the C-300’s body so

visually the two were similar although, ominously, the tailfins did grow a

little. The big news however lay under

the hood (bonnet) with the Hemi V8 enlarged to 354 cubic inches (5.8 litres)

and available either with 340 horsepower (254 kW) or in a high-compression

version generating 355 (265 kW), the first time a US-built automobile was

advertised as producing greater than one horsepower per cubic inch of

displacement. It was a sign of the

times; other manufacturers took note.

The added power meant a top speed of around 140 mph (225 km/h) could be attained,

something now to ponder given the retardative qualities of the braking system

but also of note was the season's much talked-about option: the "Highway Hi-Fi" phonograph player which allowed vinyl LP records to be played when the car was

on the move; the sound quality was remarkably good but on less than smooth

surfaces, experiences were mixed.

Success on the track continued, the 300B winning the Daytona Flying Mile

with a new record of 139.373 mph, and it again dominated NASCAR, repeating the

C-300’s Grand National Championship.

Despite that illustrious record, only 1,102 were sold.

1957 Chrysler 300C.

The 1955-1956 Chryslers had a balance and elegance of

line which could have remained a template for the industry but there were other

possibilities and these Detroit chose to pursue, creating a memorable era of

extravagance but one which proved a stylistic cul-de-sac. The 1957 300C undeniably was dramatic and

featured many of the motifs so associated with the US automobile of the late

1950s including the now (mostly) lawful quad-headlights, the panoramic “Vista-Dome”

windshield, the lashings of chrome and, of course, those tailfins. The Hemi V8 was again enlarged, now in a

“tall deck” version out to 392 cubic inches (6.4 litres) rated at 375

horsepower (280 kW) and for the first time a convertible version of the 300 was available.

By now the power race was being run in

earnest with General Motors (GM) offering fuel-injected engines and Mercury

solving the problem in the traditional American (there’s no replacement for

displacement) way by releasing a 430 cubic inch (7.0 litre) V8 although it was so big and

heavy it made the bulky Hemi seem something of a lightweight; the 430 did however briefly

find a niche in power-boat racing. For 300C owners who wanted more there was

also a high-compression version with more radical valve timing rated at 390

horsepower (290 kW) and this was for the first time able to be ordered with a

three-speed manual transmission. Few

apparently felt the need for more and of the 2,402 300Cs sold (1,918 coupes &

484 convertibles), only 18 were ordered in high-compression form.

1958 Chrysler 300D.

Again using the Hemi 392, now tuned for a standard 380 horsepower

(280 kW), there was for the first time the novelty of the optional Bendix “Electrojector”

fuel injection, which raised output to a nominal 390 horsepower (290 kW) although

its real benefit was the consistency of fuel delivery, overcoming the starvation

encountered sometimes under extreme lateral load. Unfortunately, the analogue electronics of

the era proved unequal to the task and the unreliability was both chronic and insoluble, thus almost all the 21 fuel-injected cars were retro-fitted

with the stock dual-quad induction system and it’s believed only one 300D

retains its original Bendix plumbing.

Also rare was the take-up rate for the manual transmission option and

interestingly, both of the two known 300Ds so equipped were ordered originally

with carburetors rather than fuel injection.

The engineers also secured one victory over the stylists. After testing on the proving grounds

determined the distinctive, forward jutting “eyebrow” header atop the windscreen

reduced top speed by 5 mph (8 km/h), they managed to convince management to

authorize an expensive change to the tooling, standardizing the convertible’s

compound-curved type “bubble windshield”, a then rare triumph of function over

fashion. Although the emphasis of the

letter series cars was shifting from the track to the roads, the things

genuinely still were fast and one (slightly modified) 300D set a new class record

of 156.387 mph (251.681 km/h) on the Bonneville Salt Flats. Production declined to 810 units (619 coupes

& 191 convertibles).

1959 Chrysler 300E.

With the coming of the 1959 range, the Hemi was retired

and replaced by a new 413 cubic inch (6.8 litre) V8 with wedge-shaped

combustion chambers. Lighter by some 100

lb (45 kg) and cheaper to produce than the Hemi with its demanding machining

requirements and intricate valve train, the additional displacement allowed

power output to be maintained at 380 horsepower (280 kW) while torque

(something more significant for what most drivers on the street do most of the

time) actually increased. The manual

transmission option was also deleted with no market resistance and despite the

lower production costs, the price tag rose, something probably more of a factor

in the declining sales than the loss of the much vaunted Hemi. As with the

300D (and most of the rest of the industry) the year before, the 300E was sold into

an economy suffering a relatively brief but sharp recession, so Chrysler probably did

well to shift 690 units (550 coupes & 140 convertibles).

1960 Chrysler 300F (left) and 300F engine with Sonoramic intake in red (right).

Although the rococo styling cues remained, underneath now

lay radical modernity, the corporation’s entire range (except for the exclusive

Imperial line) switching from ladder frame to unitary construction. The stylists however indulged themselves with

more external flourishes, allowing the tailfins an outward cant, culminating sharply in a point and housing boomerang-shaped taillights. Even the critics of such things found it a

pleasing look although they were less impressed by the faux spare tire cover (complete

with an emulated wheel cover!) on the trunk (boot), dubbing it the “toilet

seat”. The interior though was memorable

with four individual bucket seats in leather with a center console between extending

the cockpit’s entire length and there was also Chrysler’s intriguing

electroluminescent instrument display which, rather than being lit with bulbs,

exploited a phenomenon in which a material emits light in response to an

electric field; the ethereal glow was much admired.

Buyers in 1959 may have felt regret in not seeing a Hemi in the engine

bay, but after lifting the hood (bonnet) of a 300F they wouldn’t have been

disappointed because, in designer colors (gold, silver, blue & red) sat the

charismatic “Sonoramic” intake manifold, a “cross-ram” system which placed the

carburetors at the sides of the engine, connected by long tubular runners. What the physics of this did was provide a

short duration “supercharging” effect, tuned for the mid-range torque most used

when overtaking at freeway speeds. Also

built were a handful of “short ram” Sonoramics which had the tubes (actually

with the same length) re-tuned to deliver top-end power rather than mid-range

torque. Rated at a nominal 400 horsepower (300 kW),

these could be fitted also with the French-built Pont-à-Mousson 4-speed manual

transmission used in the Chrysler V8-powered Facel Vega and existed only for

the purpose of setting records, six 300Fs so equipped showing up at the 1960

Daytona Speed Week where they took the top six places in the event’s signature Flying

Mile, crossing the traps at between 141.5-144.9 mph (227.7-233.3 km/h). The market responded and sales rose to 1,217 (969 coupes

& 248 convertibles) and the 300F (especially those with the “short ram”

Sonoramics) is the most collectable of the letter series.

1961 Chrysler 300G.

The 300G gained canted headlights, another of those

styling fads of the 1950s & 1960s which quickly became passé but now

seem a charming period piece. There

was the usual myriad of detail changes the industry in those days dreamed up

each season, usually for no better reason than to be “different” from last year’s

model and thus be able to offer something “new”. As well as the slanted headlights, the fins became sharper still and taillights were moved. Mechanically, the specification substantially

was unaltered, the Sonoramic plumbing carried over although the expensive,

imported Pont-à-Mousson transmission was removed from the option list, replaced

by Chrysler’s own heavy-duty 3-speed manual unit, the demand for which was

predictably low. The lack of a fourth

cog didn’t impede the 300G’s performance in that year’s Daytona Flying Mile

where one would again take the title with a mark of 143 mph (230.1 km/h) and to

prove the point a stock standard model won the one mile acceleration title. People must have liked the headlights because

production reached 1,617 units (1,280 coupes & 337 convertibles).

1962 Chrysler 300H.

Perhaps a season or two too late, Chrysler “de-finned” its

whole range, prompting their designer (Virgil Exner (1909–1973)) to lament his creations

now resembled “plucked chickens”. For

1962 the 300 name also lost some of its exclusivity with the addition to the

range of the 300 Sport series (offered also with four-door bodywork) and to

muddy the waters further, much of what was fitted to the 300H could be ordered

as an option on the basic 300 so externally, but for the distinctive badge,

there was visually little to separate the two.

Mechanically, the “de-contenting” which the accountants had begun to

impose as the industry chased higher profits (short-term strategies to increase “shareholder

value” are nothing new) was felt as the Sonoramic induction system moved to the

300H’s option list with the inline dual 4-barrel carburetor setup last seen on

the 300E now standard. However, because

of weight savings gained by the adoption of a shorter wheelbase platform, the

specific performance numbers of the 300H actually slightly shaded its predecessor but

the cannibalizing of the 300 name and the public perception the thing’s place

in the hierarchy was no longer so exalted saw sales decline to 570 (435 coupes &

135 convertibles), the worst year to date.

The magic of the 300 name however seemed to work because Chrysler in the

four available body styles (2 door convertible, 2 & 4 door hardtop & 4

door sedan) sold 25,578 of the 300 Sport series, exceeding expectations. Since 1962, the verbal shorthand to distinguish between the ranges has been “letter series” and “non letter series” cars.

1963 Chrysler 300J.

Presumably in an attempt to atone for past sins, a spirit

of rectilinearism washed through Chrysler’s design office while the 1963 range

was being prepared and it would persist until the decade’s end when new sins

would be committed. Unrelated to that

was the decision to skip a 300I because of concerns it might be read as the

wholly numeric 3001. The de-contenting (now an industry trend) continued with the swivel feature for the front bucket seats deleted while the full-length

centre console was truncated at the front compartment with the rear seat now a

less eye-catching bench. The 413 V8 was

offered in a single configuration but the Sonoramics were again standard and the

manual transmission remained optional, seven buyers actually ticking the box. The

300J was still a fast car, capable of a verified 142 mph (229 km/h) although

the weight and gearing conspired against acceleration but a standing quarter mile (400

m) ET (elapsed time) of 15.8 seconds was among the quickest of the cars in its class. Still, it did seem the end of the series might

be nigh with the convertible no longer offered and the sales performance

reflected the feeling, only 400 coupes leaving the showrooms.

The BUFF: The new version of the Boeing B-52 Stratofortress (replacing the B-52H) will be the B-52J, not B-52I or B-52HH.

The US Air Force (USAF) also opted to skip “I” when allocating a designation for the updated version of the Boeing B-52 Stratofortress (1952-1962 and still in service). Between the first test flight of the B-52A in 1954 and the B-52H entering service in 1962, the designations B-52B, B-52C, B-52D, B-52E, B-52F & B-52G sequentially had been used but after flirting with whether to use B-52J as an interim designation (reflecting the installation of enhanced electronic warfare systems) before finalizing the series as the B-52K after new engines were fitted, in 2024 the USAF announced the new line would be the B-52J and only a temporary internal code would distinguish those not yet re-powered. Again, the “I” was not used so nobody would think there was a B521. Although the avionics, digital displays and ability to carry the Hypersonic Attack Cruise Missile (HACM, a scramjet-powered weapon capable of exceeding Mach 5) are the most significant changes for the B-52J, visually, it will be the replacement of the old Pratt & Whitney TF33 engines with new Rolls-Royce F130 units which will be most obvious, the F130 promising improvements in fuel efficiency of some 30% as well as reduction in noise and exhaust emissions. Already in service for 70 years, apparently no retirement date for the B-52 has yet been pencilled-in. In USAF slang, the B-52 is the BUFF (the acronym for big ugly fat fellow or big ugly fat fucker depending on who is asking). From BUFF was derived the companion acronym for the LTV A-7 Corsair II (1965-1984, the last in active service retired in 2014) which was SLUFF (Short Little Ugly Fat Fellow or Short Little Ugly Fat Fucker).

1964 Chrysler 300K.

Selling in 1963 only 400 examples of what was intended as

one of the corporation’s “halo” cars triggered management to engage in what the

Americans had come to call an “agonizing reappraisal”. The conclusion drawn was the easiest way to stimulate demand was to

lower the basic entry price to ownership of the name and if buyers really

wanted the fancy stuff once fitted as standard, they could order it from an

option list; it was essentially the same approach as used for most of Chrysler’s

other ranges. Accordingly, the leather

trim and many of the power accessories joined air-conditioning on the option

list. The base engine was now running a

single four barrel carburetor although for an additional US$375, the Sonoramic

could be ordered and combined with Chrysler’s new, robust four-speed manual

transmission. Surprising some observers,

the convertible coachwork made a return to the catalogue. All that meant the 300K could be advertised

for US$1,000 less than the 300J and the market responded in a textbook example

of price elasticity of demand, production spiking to 3,647 (3,022 coupes & 625

convertibles).

1965 Chrysler 300L (four speed manual).

Despite the stellar sales of the 300K, even before the

release of the 300L, the decision had been taken it would be the last of the

letter series. The tastes of those who

wanted high performance had shifted to the smaller, lighter pony cars and

intermediates which hadn’t even been envisaged when the C-300 had made its debut

a decade earlier. Additionally, the letter

series had outlived their usefulness as image-makers for the corporation now

they were no longer the fastest machines in the fleet and production-line rationalization

meant it was easier and more profitable to maintain a single 300 line and allow

buyers to choose their own combination of options; in other words, after 1965, it

would still be possible to create a letter series 300 in most aspects except

the badge and the now departed Sonoramics of fond memory. When the last 300L was produced it was

configured with a single four barrel carburetor and had it not been for the

badges, few would have noticed the difference between it and any other 300 with

the same body. The lower price though

continued to attract buyers and in its final year 2,845 were sold (2,405 coupes

& 440 convertibles).