Cage (pronounced keyj)

(1) A boxlike enclosure having wires, bars, or the like, for confining and displaying birds or animals or as a protective barrier for objects or people in vulnerable positions (used in specific instances as battery cage, bird-cage, birdcage, Faraday cage, tiger cage, fish cage etc).

(2) Anything that confines or imprisons; a prison and, figuratively, something which hinders physical or creative freedom (often as “caged-in”).

(3) The car or enclosed platform of an elevator.

(4) In underground mining, (1) an enclosed platform for raising and lowering people and cars in a mine shaft & (2) the drum on which cable is wound in a hoisting whim.

(5) A general descriptor for any skeleton-like framework.

(6) In baseball, (1) a movable backstop for use mainly in batting practice & (2) the catcher's wire mask.

(7) In ice hockey and field hockey, a frame with a net attached to it, forming the goal.

(8) In basketball, the basket (mostly archaic).

(9) In various sports which involve putting a ball or other object into or through a receptacle (net, hole), to score a goal or something equivalent.

(10) In fashion, a loose, sheer or lacy overdress worn with a slip or a close-fitting dress.

(11) In ordnance, a steel framework for supporting guns.

(12) In engineering, (1) various forms of retainers, (2) a skeleton ring device which ensures the correct space is maintained between the individual rollers or balls in a rolling bearing & (3) the wirework strainers used to remove solid matter from the fluids passing through pumps and pipes.

(13) To put (something or someone) into some form of confinement (which need not literally be in a cage).

(14) In underwear design, as cage bra, a design which uses exposed straps as a feature.

(15) In computer hardware, as card cage, the area of a system board where slots are provided for plug-in cards (expansion boards).

(16) In anatomy (including in zoology) as rib-cage, the arrangement of the ribs as a protective enclosure for vital organs.

(17) In athletics, the area from which competitors throw a discus or hammer.

(18) In graph theory, a regular graph that has as few vertices as possible for its degree and girth.

(19) In killer Sudoku puzzles, an irregularly shaped group of cells that must contain a set of unique digits adding up to a certain total, in addition to the usual constraints of Sudoku.

(20) In aviation, to immobilize an artificial horizon.

1175–1225: From the Middle English cage (and the earlier forms kage & gage), from the Old French cage (prison; retreat, hideout), from the Latin cavea (hollow place, enclosure for animals, coop, hive, stall, dungeon, spectators' seats in a theatre), the construct being cav(us) (hollow) + -ea, the feminine of -eus (the adjectival suffix); a doublet of cadge and related to jail. The Latin cavea was also the source of the Italian gabbia (basket for fowls, coop).

The noun (box-like receptacle or enclosure, with open spaces, made of wires, reeds etc), which typically described the barred boxes used for confining domesticated birds or wild beasts, was the first form, and from circa 1300 it was also used in English to describe "a cage for prisoners, jail, prison, a cell".

The noun bird-cage (also birdcage) was formed in the late fifteenth century to describe a "portable enclosure for birds", as distinct from the static cages which came to be called aviaries.

The idiomatic “gilded cage” refers to a place (and, by extension, a situation) which is superficially attractive but nevertheless restrictive (a luxurious trap) and appears to have been coined by the writers of the popular song A Bird in a Gilded Cage (1900).

To “rattle someone's cage” is to upset or anger them, based on the reaction of imprisoned creatures (human & animal) to the noise made by shaking their cages.

The verb (to confine in a cage, to shut up or confine) dates from the 1570s and was derived from the noun. The synonyms for the verb include crate, jail, pen, coop up, corral, fold, mew, pinfold, pound, confine, enclose, envelop, hem, immure, impound, imprison, incarcerate, restrain & close-in. Cage is a noun, verb and (occasional) adjective; caged & caging are verbs (used with object); constructions include cage-less, cage-like & re-cage; the noun plural is cages.

Wholly unrelated to cage was the adjective cagey (the frequently used derived terms being cagily & caginess), a US colloquial form meaning “evasive, reticent”, said to date from 1896 (although there had in late sixteenth-century English been an earlier cagey which was a synonym of sportive (from sport and meaning “frolicsome”)). The origin of the US creation (the sense of which has expanded to “wary, careful, shrewd; uncommunicative, unwilling or hesitant to give information”) is unknown. Although the late nineteenth-century use has been attested, adoption must have been too hesitant to tempt lexicographers on either side of the Atlantic, because cagey appears in neither the 1928 Webster’s Dictionary nor the 1933 supplement to the Oxford English Dictionary (OED).

John Cage (1912–1992) was a US avant-garde composer who, inter alia, was one of the pioneers in the use of electronic equipment to create music. He’s also noted for the 1952 work 4′33″, which is often thought to be a period of literal silence for a duration of that length but is actually designed to be enjoyed as the experience of whatever sounds emerge from the environment (the space, the non-performing musicians and the audience). It was an interesting idea which both explored the definitional nature of silence and paralleled twentieth-century exercises in pop art by prompting discussion about just what could be called "music".

The related forms jail and gaol are of interest. Jail as a noun dates from circa 1300 (although it had by then been used as a surname for at least a hundred years) and meant "a prison; a birdcage". It was from the Middle English jaile, from the Old French jaiole (a cage; a prison), from the Medieval Latin gabiola (a cage (and the source also of the Spanish gayola and the Italian gabbiula)), from the Late Latin caveola, a diminutive of the Latin cavea. The spellings gaile & gaiole were actually the more frequent forms in Middle English. These were from the Old French gaiole (a cage; a prison), a variant spelling thought prevalent in Old North French, which would have been the language most familiar to Norman scribes; gaol eventually emerged under that influence. Gaol has long been pronounced “jail”. The persistence of gaol as the preferred form in the UK is attributed to its continued use in statutes and other official documents, although there may also have been some reluctance to adopt “jail” because it had come to be regarded as an Americanism.

The Mortsafe

A mortsafe.

The construct was mort + safe. Mort was from the Middle English mort, from the Old French mort (death). Safe was from the Middle English sauf, safe, saf & saaf, from the Old French sauf, saulf & salf (safe), from the Latin salvus (whole, safe), from the Proto-Italic salwos, from the primitive Indo-European solh- (whole, every); it displaced the native Old English sicor (secure, sure). In the case of “mortsafe”, the “mort” element was used in the sense of “corpse; body of the dead”. The “safe” element can be read either as a noun (an enclosed structure in which material can be secure from theft or other interference) or as a verb (to make something safe). For its specific purpose, a mortsafe was wholly analogous with other constructions (meatsafe, moneysafe etc).

Popular in the UK in the eighteenth & nineteenth centuries, mortsafes were structures placed over a grave to prevent resurrectionists (now better remembered as “body-snatchers” or “grave-robbers”) from exhuming the corpse or stealing any valuables which may have been interred with the dead. The companion term was morthouse, a secure facility in which bodies were kept for a period prior to burial (obviating the need for a mortsafe). The noun “resurrectionist” was later re-purposed to describe (1) a believer in a future bodily resurrection, (2) one who revives (more often “attempts to revive”) old practices or ideas, (3) one who (for profit or as a hobby) restores or reconditions objects and (4) in the humor of the turf, a racehorse that mid-course recovers its speed or stamina.

Mortsafes usually were fashioned of wrought iron (sometimes in combination with concrete slabs). Those hired or leased for only a few weeks usually were secured by pile-like extensions driven into the ground, while those permanently installed were “concreted in”. The tradition of burying the dead with valuables has a long history (the best-known example being the tombs of the pharaohs (supreme rulers) of Ancient Egypt). Although any treasure likely to end up in coffins in the eighteenth-century UK was by comparison modest, items like wedding rings or other jewellery sometimes were included. The body-snatcher trade existed because there was demand from medical schools which needed a fair number of fresh cadavers for anatomical study and student instruction.

Demand: Anatomische les van dr. Willem Röell (Anatomy lesson by Dr Willem Röell (1700–1775)) (1728), oil on canvas by Cornelis Troost (1697–1750), Amsterdam Museum.

The Enlightenment (which appears in history texts also as the “Age of Reason”) was the period in Europe which created a framework for modernity. Beginning late in the seventeenth century, it was an intellectual and cultural movement which sought to apply reason and scientific rigor to explore and explain the world. During the eighteenth century the Enlightenment spread throughout Western Europe, the Americas and much of the territory of European empires, bringing ideas of individual liberty, religious tolerance and the concept of systematic scientific investigation. Superstition didn’t vanish as the Enlightenment spread truth, but it was increasingly marginalized to matters where proof or disproof was not possible. One of the benefits of the Enlightenment was the expansion of medical education, which was good (at least sometimes). It also created a demand for fresh corpses which could be used for dissection, the quite reasonable rationale being that it was preferable for students to practice on the dead rather than the living; in the pre-refrigeration age, demand was high and, during the instructional terms of medical schools, often constant. The Enlightenment didn’t change the laws of supply and demand, and entrepreneurial commerce was there to provide supply. The resurrectionists usually undertook their ghoulish work under cover of darkness, when cemeteries tended to be deserted.

Supply: Resurrectionists at work (1887), illustration by Hablot Knight Browne (1815–1882), whose work usually was credited to his pen-name "Phiz".

Ghoul was from the French goule, from the Persian غول (ġul), from the Arabic غُول (ḡūl); in mythology, ghouls were demons from the underworld who at night visited the Earth to feast on the dead. It was an obvious term to apply to grave-robbers, although for generations their interest tended to be in whatever valuables might be found. Only later did the “specialists” come to be known as “body-snatchers”, a profession created by corpses becoming commodities. By extension, in the modern era, those with a disturbing or obsessive interest in death or dying came to be labelled “ghouls” and their proclivities “ghoulish”.

Mortsafes were usually an effective deterrent to body-snatching and some have survived, although in the eighteenth century they were more common than those few would suggest. Wealthy families paid for permanent structures, but many mortsafes were leased from cemeteries or ironmongers for only the short time required before the processes of decay and putrefaction rendered a corpse no longer a tradeable commodity. Sturdy and durable, ex-lease mortsafes were recycled for the next burial. Despite the Enlightenment, rumors still spread that the mortsafes were there to keep the undead from rising again to walk the earth but in truth they were there to keep others out, not the deceased in. Still, the idea has potential and were crooked Hillary Clinton (b 1947; US secretary of state 2009–2013) to die (God forbid), some might be tempted to install a mortsafe atop her grave so she couldn’t arise…just to be sure.

Turreted watchtower (1827), Dalkeith Cemetery, near Edinburgh (photograph by Kim Traynor).

In England, the Murder Act (1751) had mandated that the bodies of executed murderers could be deemed property of the state. A supply for the training of surgeons thus existed, but demand proved greater. The authorities' solution was usually to “turn a blind eye” to the activities of the grave-robbers (as long as they restricted the trade to snatching the deceased working class). In Scotland, however, which (as it does now) operated a separate legal system, there was much public disquiet. North of the border there was great reverence for the dead and, among the population, a widespread belief in resurrection (in the sacred sense), the precepts of which included that the dead could not rise if their bodies were incomplete. Body-snatchers were thus regarded as desecrators. Vigilantes formed parties to protect graveyards, and there were even fatalities as body-snatchers were attacked. So seriously was the matter taken in Scotland that some graveyards had permanent stone structures (“watch-towers” or “watch-houses”) to house the “watchers”. These were volunteer organizations (which, depending on the size of the town, could be over a thousand strong) whose rosters ensured shifts were available to watch over the site. Reputedly, some especially entrepreneurial suppliers solved the problem of interference by the authorities or “concerned citizens” by “cutting out the middle man” (as it were). They murdered tramps, vagrants and such, and supplied the bodies to surgeons trusted not to ask too many questions.

The legislative response in the UK was the Anatomy Act (1832, known as the “Warburton Anatomy Act”), which made lawful the donation of dead bodies to “authorized parties” (surgeons, researchers, medical lecturers and students) licensed to dissect. This was the codified origin of the notion of “donating one’s body to science”, the modern fork of which is the “organ donation” system. With the passage of the 1832 act, supply soon exceeded demand, and it became (in some circles) fashionable to include in one’s will a clause donating one's body to science. Some families, in the spirit of the Enlightenment, made the gesture because they were anxious to assist the progress of medical science, while others wished just to avoid the expense of a funeral.

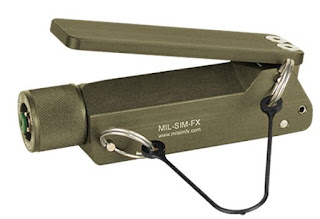

The cage bra

The single-strap cage bra.

A cage bra is built with a harness-like structure which (vaguely) resembles a cage, encapsulating the breasts using one or more straps. Few actually use those straps predominantly to enhance support, and the effect tends to be purely aesthetic. Some cage bras have minimal (or even absent) cup coverage and a thin band or multi-strap back. Designed to be at least partially seen and admired, cage bras can be worn under sheer fabrics, with clothes cut to reveal the construction, or even (in elaborated form and often on red carpets) alone. The effect is borrowed from engineering or architecture, where components once concealed (air conditioning ducting, plumbing, electrical conduits etc) deliberately are exposed. It’s thus a complete reversal of the old rule under which the sight of a bra strap was a fashion-fail. The idea has been extended to sports bras, which have long used additional, thick straps to enhance support and minimize movement, especially movement induced by lateral forces not usually encountered in everyday life.

Lindsay Lohan in a harness cage bra with sheer cups and matching knickers.

The cage bra's salient features include:

(1) The straps, which are a cage’s most distinctive feature. The simplest include only a single additional strap across the centre, while others have a pair, usually defining the upper pole of each cup. Beyond that, multiple straps can be used at both the front and back; some may have a functional purpose while others are merely decorative. Single-strap cage bras are often worn to add distinctiveness to camisoles. Those with multiple straps are referred to as the harness style and have the additional benefit (or drawback, depending on one’s view) of offering more frontal coverage, the straps sometimes serving as a framework for lace or other detailing. This is a popular approach taken with cage bralettes.

Front and back views of modestly styled criss-cross cage bras.

(2) Many cage bras are constructed around a traditional back band, especially those which need to provide lift & support, while others (usually those with smaller cups) have a thin band (merely for location) or none at all. In this acknowledgement of the laws of physics, they’re like any other bra. Those with a conventional back band (both bras and bralettes) are often constructed as the V-shaped cage. The symmetrical straps are well suited to V-necks or even square necks and, paired with cardigans or more structured jackets or blazers, they’re currently the segment's best-sellers. A more dramatic look is the criss-cross cage, but fashionistas caution this works well only in minimal surroundings, so accessories should be limited to earrings or stuff worn on the wrist or beyond.

Example of the cage motif applied to a conventional bra, suitable for larger sizes.

(3) As a general principle, cage bras tend to be manufactured with cup sizes in the smaller range, supply reflecting the anticipated demand curve. However, even the nominal cup size (A, B, C etc) of cage bras can be misleading. They almost always have less coverage than all but the most minimal conventional bras and should be compared with a demi cup or the three-quarter style of plunge bras. That said, there are strappy designs which include molded cups with underwires suitable for larger sizes. It’s a niche market and the range is narrow, because the scope for flourishes is limited by the need to preserve functionality, a demand which, all else being equal, tends to increase as mass grows. Unlike the structural underwire, many of the "underwireish" parts of a cage bra are purely decorative.

Examples of designs used to fabricate harness cage bras which can be worn under or over clothing or, in some cases, to augment a more conventional bra or bralette.

(4) Despite the specialized nature of cage bras, some are multi-purpose and include padding, with all the usual advantages in concealment and additional volume, permitting use as an everyday garment rather than one used exclusively for display. Some include removable padding so the bra can be transformed into a see-through design.

(5) The methods of closure vary. The most uncompromising designs actually have no closure mechanism, the idea being that one would detract from the purity of the lines, so the wearer must pull the garment over the head; to be fashionable, sometimes there's a price to be paid. Other types use both front and back closures, usually with conventional hook & clasp fittings (so standard-sized extenders can sometimes be used). Some, though, borrow overtly from the traditions of BDSM underwear (the origin of the cage bra motif) and use extravagantly obvious buckles and even the occasional key-lock. The BDSM look is most obviously executed in the choker cage bra, which includes a neck choker as a focal point to accentuate the neck and torso, something best suited to a long, slender neck. Buyers are advised to move around when trying these on. The origins of the BDSM motif lie in devices used in medieval torture routines, so a comfortable fit is important.

Cage bralette.

(6) Almost all cage bras continue to use the same materials as conventional garments, the fabrics of choice being nylon or spandex, their elasticity permitting some adjustment to accommodate variations in shape or location. The straps, sometimes augmented with lace, fabric, mesh or metal rings, can also be made from leather.

Singer Ricki-Lee Coulter (b 1985, left) in a (sort of) dress with an illusion panel under the strappings which may be compared with an illusion bra (right).

(7) The cage and the illusion. The illusion industry variously exchanges and borrows motifs. Used by fashion designers, the illusion panel is a visual trick which to some extent mimics the appearance of bare skin. It’s done with flesh-colored fabric, cut to conform to the shape of the wearer. The best-known products are called illusion dresses, although the concept can appear on other styles of garment. Done well, the trick works, sometimes even close-up, but it’s ideal for photo opportunities. Cage bras use a structure which can recall the struts used in airframes or the futtocks which form part of a ship's frame in naval architecture. That makes them an ideal framework for illusion panels.